The Autonomous Enterprise: Thought Experiment

Thought Experiment: A Driver Encounters a Stop Sign

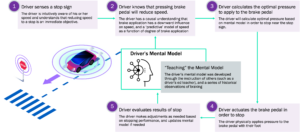

To understand the basic principles of autonomous systems, consider a scenario in which a human driver approaches a stop sign.

First, the driver becomes aware of the stop sign through his vision, while also being intuitively aware of his speed and rate of acceleration through the physical sensation of motion that he experiences. These sensory capabilities give the driver the ability to observe his current state. Next, the driver has established an immediate objective: reducing speed to come to a stop directly in front of the stop sign.

Equipped with a sensory understanding of his current state (approaching a stop sign at his current velocity) and his current objective (stopping directly in front of the stop sign), the driver may now reference his mental model of how his actions affect his speed. The driver knows that applying pressure to the brake pedal with his foot will reduce his speed. In other words, the driver has a causal understanding of the relationship between brake actuation and speed reduction.

This mental model is not binary but a matter of degree. Beyond his understanding that braking reduces speed, the driver knows that a hard press on the brake will reduce speed abruptly, while a soft press will reduce it gradually, with a continuous spectrum of magnitudes in between. In other words, the driver has a predictive model of speed as a function of the degree of brake application. While this predictive model is strongly intuitive and almost subconscious, it is nonetheless robust for most competent drivers.
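
To make the idea of a graded predictive model concrete, here is a minimal Python sketch. It assumes, purely for illustration, that deceleration scales linearly with pedal pressure (normalized to the range 0 to 1) up to a hypothetical maximum of 8 m/s²; real brake response is nonlinear and vehicle-specific. The names `MAX_DECEL`, `deceleration`, `predicted_speed`, and `stopping_distance` are invented for this example.

```python
MAX_DECEL = 8.0  # m/s^2 at full pedal pressure (hypothetical value)


def deceleration(pressure: float) -> float:
    """Predicted deceleration (m/s^2) for a pedal pressure between 0 and 1.

    Assumes a simple linear brake response, for illustration only.
    """
    pressure = min(max(pressure, 0.0), 1.0)  # clamp to the pedal's range
    return pressure * MAX_DECEL


def predicted_speed(v0: float, pressure: float, t: float) -> float:
    """Predicted speed (m/s) after holding `pressure` for t seconds."""
    return max(0.0, v0 - deceleration(pressure) * t)


def stopping_distance(v0: float, pressure: float) -> float:
    """Predicted distance (m) to a full stop, from v0^2 = 2 * a * d."""
    a = deceleration(pressure)
    return float("inf") if a == 0.0 else v0 ** 2 / (2.0 * a)


for p in (0.25, 0.5, 1.0):
    print(f"pressure {p:.2f}: stops in {stopping_distance(15.0, p):.2f} m")
```

Under these assumptions, a car at 15 m/s is predicted to stop in 56.25 m with a quarter press and in about 14 m with a full press, with every distance in between reachable by some intermediate pressure.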

The driver can use this predictive model to determine the optimal braking maneuver. For example, with a sensory understanding of his current speed and the position of the stop sign, the driver can deduce the optimal amount of pressure to apply to the brake in order to stop near the stop sign (not too far before it and not too far past it) and to stop at the desired rate (that is, without slamming on the brake and losing control, and without braking so lightly that he rolls past the stop sign altogether). In other words, the driver can mentally optimize his braking process to control the vehicle. Once this optimal reaction is mentally derived, the driver can physically actuate his decision by applying pressure to the brake with his foot.
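
The optimization step can be sketched as a search over candidate pressures, scored with a predictive model. This is a hypothetical illustration, not a claim about how drivers or vehicles actually compute it: it assumes the same linear brake model (maximum 8 m/s²), adds an assumed comfort limit of 6 m/s² to stand in for "not slamming on the brake", and picks the pressure whose predicted stopping point lands closest to the sign. `COMFORT_DECEL` and `optimal_pressure` are invented names.

```python
MAX_DECEL = 8.0      # m/s^2 at full pedal pressure (assumed)
COMFORT_DECEL = 6.0  # m/s^2; harder braking counts as "slamming" (assumed)


def stopping_distance(v0: float, pressure: float) -> float:
    """Predicted stopping distance (m) under the assumed linear brake model."""
    a = pressure * MAX_DECEL
    return float("inf") if a == 0.0 else v0 ** 2 / (2.0 * a)


def optimal_pressure(v0: float, distance_to_sign: float, steps: int = 1000):
    """Return (pressure, error_m) for the candidate whose predicted stop lands
    closest to the sign without exceeding the comfort limit."""
    best_p, best_err = None, float("inf")
    for i in range(1, steps + 1):
        p = i / steps
        if p * MAX_DECEL > COMFORT_DECEL:
            break  # any harder pressure would exceed the comfort limit
        err = abs(stopping_distance(v0, p) - distance_to_sign)
        if err < best_err:
            best_p, best_err = p, err
    return best_p, best_err


pressure, error = optimal_pressure(15.0, 40.0)
print(f"press {pressure:.3f}, predicted miss {error:.3f} m")
```

For a car at 15 m/s and 40 m from the sign, the search settles on a pressure of about 0.352, predicting a stop within about 5 cm of the sign. If the sign is too close to reach within the comfort limit, the sketch returns the hardest comfortable pressure, and the remaining error shows how far past the sign the car would roll. A brute-force search is used instead of solving v² = 2ad directly so that the same structure carries over to objectives that have no closed-form answer.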

As the driver carries out the braking process according to his calculated amount of pressure, he receives continuous feedback through his vision and motion-based senses that reinforces the braking action positively or negatively. This feedback allows the driver to continuously refine and adjust his level of braking according to how fast he seems to be slowing down and where the stop sign is.
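
Feedback matters most when the mental model is slightly wrong. The sketch below assumes, hypothetically, that the driver believes full braking gives 8 m/s² while the real car delivers only 7 m/s². A plan computed once from the wrong model (open loop) misses the sign, whereas re-planning at every time step from the newly observed speed and remaining distance (closed loop) corrects the error. The function names, the 0.05 s time step, and both deceleration values are illustrative assumptions.

```python
BELIEVED_MAX_DECEL = 8.0  # m/s^2 the driver thinks full braking gives (assumed)
TRUE_MAX_DECEL = 7.0      # m/s^2 the car actually delivers (assumed)


def open_loop_remaining(v0: float, distance: float) -> float:
    """Plan once from the mental model, then never look again.

    Returns the distance left to the sign when the car stops
    (negative means it stopped past the sign).
    """
    pressure = (v0 ** 2 / (2.0 * distance)) / BELIEVED_MAX_DECEL
    actual_decel = pressure * TRUE_MAX_DECEL
    return distance - v0 ** 2 / (2.0 * actual_decel)


def closed_loop_remaining(v0: float, distance: float, dt: float = 0.05) -> float:
    """Re-plan every dt seconds from the observed speed and remaining distance."""
    v, x = v0, distance
    while v > 0.0:
        if x > 0.0:
            # Observe the current state and re-derive the needed deceleration.
            required = v * v / (2.0 * x)
            pressure = min(1.0, required / BELIEVED_MAX_DECEL)
        else:
            pressure = 1.0  # at or past the sign: brake fully
        # Act on the real car, whose response differs from the mental model.
        decel = pressure * TRUE_MAX_DECEL
        v_next = max(0.0, v - decel * dt)
        x -= 0.5 * (v + v_next) * dt  # distance covered during this step
        v = v_next
    return x


print(f"open loop:   {open_loop_remaining(15.0, 40.0):+.2f} m from the sign")
print(f"closed loop: {closed_loop_remaining(15.0, 40.0):+.2f} m from the sign")
```

Starting at 15 m/s and 40 m from the sign, the open-loop plan stops roughly 5.7 m past the sign, while the closed-loop version ends within a fraction of a meter of it, even though both use the same imperfect model.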

With all these cognitive functions available, the driver can safely and successfully come to a stop at the desired rate and at the desired distance before the stop sign. So how exactly did this driver develop this intelligence and autonomous capability? Some of it was programmed through explicit instructions from others. For example, a driver’s education teacher explicitly explained that to stop, the brake must be pressed. Most of it, however, was learned through a series of historical observations, perhaps as a passenger, or through the practice and experiential reinforcement that come with driving over time.
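
The learned part of the driver's model can be illustrated as fitting a parameter to past observations. In this hypothetical sketch, the explicitly taught rule (pressing the brake slows the car) fixes the form of the model, deceleration = gain × pressure, and the gain is estimated by least squares from logged (pressure, deceleration) pairs. The observations are synthetic, generated from an assumed true gain of 7 m/s² plus Gaussian noise; they are not measured data, and `fit_brake_gain` and `synthetic_observations` are invented names.

```python
import random


def fit_brake_gain(observations):
    """Least-squares estimate of `gain` in decel = gain * pressure.

    Minimizing sum((a - gain * p)^2) over gain gives
    gain = sum(p * a) / sum(p * p).
    """
    num = sum(p * a for p, a in observations)
    den = sum(p * p for p, _ in observations)
    return num / den


def synthetic_observations(true_gain=7.0, n=50, noise=0.2, seed=0):
    """Hypothetical logged (pressure, deceleration) pairs; NOT real data."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        p = rng.uniform(0.1, 1.0)
        samples.append((p, true_gain * p + rng.gauss(0.0, noise)))
    return samples


estimated_gain = fit_brake_gain(synthetic_observations())
print(f"estimated gain: {estimated_gain:.2f} m/s^2")
```

With 50 noisy samples the estimate lands close to the assumed 7 m/s²; more observations, the analogue of more driving experience, tighten it further.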

The process of braking at a stop sign is one of many actions a driver is responsible for managing while driving, along with actions like maintaining speed, staying within the lanes, avoiding collisions with obstacles, and planning an efficient path to a destination. It is also just one of the processes over which many vehicles offer autonomous control today, along with cruise control, lane assist, collision detection, and many more. Each of these other control categories follows a cognitive pattern similar to that of the stopping procedure.

This is just one example of the multitude of closed-loop optimization scenarios in which a driver is responsible for establishing an objective, observing his current state, inferring which interventions would move that state toward his objective, making rapid predictions about his future state under possible interventions, creating an optimal decision strategy to meet that objective, and then acting according to that strategy.
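
The same loop (objective, observation, prediction, decision, action) carries over to other control categories such as cruise control. The sketch below is a hypothetical, simplified speed controller: its predictive model assumes 3 m/s² of acceleration per unit of command (negative commands brake) plus a linear drag term, while the simulated real car responds with only 2.7 m/s² per unit. All constants and names are illustrative assumptions, and the brute-force search over 41 candidate commands stands in for whatever optimizer a production system would use.

```python
BELIEVED_ACCEL = 3.0  # m/s^2 per unit command in the controller's model (assumed)
DRAG = 0.05           # 1/s, speed-proportional drag (assumed)


def predict(v: float, u: float, dt: float, steps: int) -> float:
    """Predict speed after holding command u (-1 = full brake, 1 = full throttle)."""
    for _ in range(steps):
        v += (BELIEVED_ACCEL * u - DRAG * v) * dt
    return v


def decide(v: float, target: float, dt: float, horizon: int = 10) -> float:
    """Choose the candidate command whose predicted speed lands closest to target."""
    candidates = [i / 20 for i in range(-20, 21)]  # -1.0, -0.95, ..., 1.0
    return min(candidates, key=lambda u: abs(predict(v, u, dt, horizon) - target))


def run(v0: float, target: float, seconds: float = 60.0, dt: float = 0.1,
        true_accel: float = 2.7) -> float:
    """Closed loop: observe speed, predict, decide, act on the simulated car."""
    v = v0
    for _ in range(round(seconds / dt)):
        u = decide(v, target, dt)              # observation + objective -> decision
        v += (true_accel * u - DRAG * v) * dt  # the real car responds differently
    return v


print(f"speed after 60 s: {run(20.0, 25.0):.2f} m/s")
```

Starting from 20 m/s with a 25 m/s target, the simulated speed settles slightly below the target because of the model mismatch; repeated observation keeps that error small even though the model is imperfect.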

When engineers or data scientists venture down the path of AI and autonomous systems—be it self-driving cars or self-optimizing manufacturing procedures—the fundamental objective is to instill these same human-like abilities—to observe, infer, decide and act—into the systems we seek to optimize.