Practical Strategies for Using Generative AI Tools in Your Organization

According to our latest State of Smart Manufacturing Report, 83% of manufacturers surveyed anticipate using Generative AI in their 2024 operations. While ChatGPT and other Generative AI tools carve the frontier of AI maturity, a common question among product development organizations is “How do I put these tools into practice for my organization?”

In this article, we explore practical and tactical strategies for taking advantage of Generative AI tools, including:

- Avoiding searching for a solution before defining the problem

- Ensuring governance is part of your information security strategy

- Opting for purpose-built agents vs. general-use models

Problems First, Solutions Next

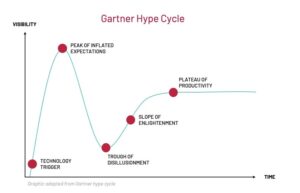

In terms of the Gartner Hype Cycle™, Generative AI sits between the Peak of Inflated Expectations and the Trough of Disillusionment. While some early movers lost hope from failed experiments, others still dream Generative AI will solve all their problems. By defining your business’s big bet and benefit use cases and aligning those goals to the right enabling technology, you can steer away from these missteps and walk on the desirable Slope of Enlightenment.

To accomplish this, start by defining the use case, considering these questions:

- What challenge do we face? (Articulate the problem statement)

- Who does the problem affect? (Identify the target personas)

- How does the problem affect them? (Assess the potential value)

- What consequences arise if we ignore the problem? (Determine the urgency)

- How will our world be different if we solve the problem? (Define success)

After clearly articulating the problem statement and defining the benefits, you can then assess the best tool available to solve the problem. Generative AI’s power comes into play primarily for content consumption (summarization, search and other knowledge acquisition tasks), content creation (drafting, ideating, auto-filling) and new technology creation (innovations made from multiple AI components). Causal AI, or “traditional” machine learning, serves more deterministic needs, such as forecasting, anomaly detection and outcome optimization.

Considering this question of technology fit, let’s look at two contrasting approaches to getting started.

Wrong path: Defining the Solution Before the Problem

“We have an issue with root cause analysis from quality incidents. Can we use an LLM to sift through and guide actions from quality incident data to determine the root cause?”

Although we stated a problem and proposed an LLM for a language-based task (summarization and deduction), we settled on an LLM as the solution before fully articulating the problem. This solution-first thinking can bias the problem-solving process and lead to building a solution without sufficient ROI. That is especially concerning when a simple solution, such as an “if/then” rules engine, is all that’s needed.

Right path: Determining the Problem Paves the Way for a Solution

“We have an issue with root cause analysis from quality incidents. When the engineering team receives quality incident records for review, they find 50% of the records are misclassified and 60% are incomplete. This leads to unnecessary time re-routing incidents and hunting down people to fill in the missing information needed for root cause analysis. On the other hand, operators cite spending 15 minutes on average to log a quality incident, and when they are too busy, they only record the minimal information required. We need to make it easier for operators to properly record complete quality incidents and help engineers quickly understand and act on them.”

In the right path version, we clearly describe our challenge, the quantifiable business consequences, the impacted groups and what we wish were better. With all that information, we might conclude that two solutions are needed – the first is an LLM that can consume quality incident text and auto-classify the type of incident based on a pre-defined picklist. The second could be a combination rules engine and LLM to streamline the creation of quality incident records, with rules controlling for required fields and the LLM offering auto-fill suggestions to speed up the data entry process.
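
The split between the two described above can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: the picklist values, the required field names and the `classify` callable (a stand-in for an LLM call) are all assumptions, and a keyword stub replaces the model so the sketch runs offline.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical picklist and required fields; a real system would load
# these from the quality management system.
INCIDENT_TYPES = ["dimensional defect", "surface defect", "missing component", "other"]
REQUIRED_FIELDS = ["line", "part_number", "description"]


@dataclass
class IncidentDraft:
    fields: dict = field(default_factory=dict)


def missing_required(draft: IncidentDraft) -> list[str]:
    """Deterministic rule: list required fields that are absent or blank."""
    return [
        name for name in REQUIRED_FIELDS
        if not str(draft.fields.get(name, "")).strip()
    ]


def _keyword_stub(description: str) -> str:
    """Placeholder for the LLM so the sketch runs without a model."""
    text = description.lower()
    if "scratch" in text or "dent" in text:
        return "surface defect"
    if "tolerance" in text or "diameter" in text:
        return "dimensional defect"
    if "missing" in text:
        return "missing component"
    return "other"


def suggest_incident_type(description: str, classify=None) -> str:
    """Suggest a picklist value for a free-text description.

    `classify` stands in for an LLM call (assumed signature: str -> str).
    Its answer is accepted only if it appears on the picklist.
    """
    classify = classify or _keyword_stub
    answer = classify(description).strip().lower()
    return answer if answer in INCIDENT_TYPES else "other"


draft = IncidentDraft({"line": "L3", "description": "Deep scratch on housing"})
print(missing_required(draft))
print(suggest_incident_type(draft.fields["description"]))
```

The rules stay deterministic (a record is either complete or not), while the probabilistic suggestion is constrained to the picklist, so an off-list model answer falls back to "other" rather than creating a new category.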

A successful business case leads with the value proposition and then finds the right tool for the job. The tool should work with the structure and nature of the data (natural language, time-series data, hierarchical data structures, etc.), the output (probabilistic vs. deterministic), and the complexity of the problem.

Investing in Governance & Security

As you move from identifying use cases to putting them into action, prioritizing information security is crucial. The first step is choosing a platform that offers a private cloud version of these advanced models (such as Azure’s OpenAI Services or Google’s Vertex AI) for your enterprise. This ensures your intellectual property doesn’t contribute to training publicly accessible models. However, security measures and safeguards against misuse must go further. Robust governance frameworks and multi-layered security protocols are essential to protect your data, maintain compliance and mitigate risks associated with enterprise AI deployment.

But don’t limit governance to just restricting what can be done with these models. To unlock the power of democratizing data science and bring a diversity of end users into the solutioning process, the group that defines how not to use Generative AI tools should also guide how to use them. Disseminating knowledge through a variety of mediums will help broaden the audience and meet users where they are in their AI journey. This should include a mix of formal training, prompt engineering guides, community-of-practice forums and compelling case studies.

Three categories of security and governance to consider include:

- Data protection and access controls: Data anonymization mechanisms, role-based access controls and other data exchange safeguards help to protect against data leaks of sensitive information such as personally identifiable information (PII) and confidential company data.

- Usage guidelines and guardrails: Ethical use guidelines, prompt shields and output validations, for example, control how users interact with the model and act on it, mitigating risks such as bias, hallucinations or general misuse.

- Auditing and performance monitoring: Third-party risk assessments of selected vendors, additional security testing for vulnerabilities and continuous model performance monitoring and updates add extra security layers to address the unique vulnerabilities presented by these novel solutions.
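
As one illustration of the first category, the sketch below applies a role check and a redaction pass before any text reaches a model. It is a simplified example under stated assumptions: the role names, data source names and regular expressions are hypothetical, and two patterns are nowhere near sufficient for real PII detection.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical mapping of roles to the data sources they may query.
ROLE_SOURCES = {
    "operator": {"sops"},
    "engineer": {"sops", "quality_incidents"},
}


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def build_prompt(role: str, source: str, user_text: str) -> str:
    """Enforce role-based access, then redact the text sent to the model."""
    if source not in ROLE_SOURCES.get(role, set()):
        raise PermissionError(f"role {role!r} may not query {source!r}")
    return f"Source: {source}\nQuestion: {redact(user_text)}"


print(redact("Contact jane.doe@example.com or +1 (414) 555-0100 about lot 42"))
```

Placing both checks in the function that assembles the prompt means no caller can reach the model without passing through them.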

Integrated, Purpose-Built Agents

Many AI use cases seek to increase productivity, but AI alone is not enough to improve work processes. The most valuable productivity gain emerges when pipelines and processes are integrated to bring AI insights and assistance to users when and where they need it. This could be within worker productivity tools such as Teams, enterprise applications such as PLM and MES, or even right on an HMI or VR headset. Regardless of the destination, human-centered design along with traditional software development and data engineering are essential to implementing a successful workforce-multiplying solution.

Human-centered design approaches such as persona definition, process journey mapping and voice of the customer feedback cycles aid in translating AI outputs into human-digestible and actionable insights. For Generative AI models, these approaches emphasize a specific user’s needs as opposed to a generic user. Traditional software development then brings the full pipeline together through database integrations, APIs, pre-processing model inputs, post-processing outputs, and UI development.

Let’s look at how human-centered design can be used to transform a frustrating, manual process into a seamless AI-integrated workflow.

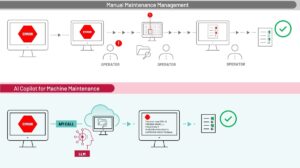

Maintenance issue handled manually

A machine failure code appears on the HMI. In the best-case scenario, the operator reads and interprets the message, searches the document database to find the relevant SOP, then identifies and performs the troubleshooting steps outlined within the document. More often, the operator reads and interprets the message, then relies on previous knowledge to identify and perform the troubleshooting steps. The issues here are that experienced operators are aging out of the workforce and that information is often hard to find for those with less experience.

Maintenance issue assisted by an AI copilot

A failure code appears. An API call invokes an LLM supplemented with a retrieval-augmented generation (RAG) information store to search for the relevant troubleshooting steps across a library of maintenance manuals and SOPs. The result is displayed on the HMI as a natural language response that guides the end user through the troubleshooting steps and offers a conversational assistant to help dig deeper into the issue.
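
The retrieval step in this flow could look something like the sketch below. It is illustrative only: the SOP identifiers and text are invented, simple keyword overlap stands in for the embedding-based search a real RAG store would typically use, and `llm` is an assumed callable rather than any specific vendor API.

```python
import re

# Hypothetical SOP excerpts; a real store would index full manuals.
SOP_LIBRARY = {
    "SOP-101": "Failure code E42 conveyor jam: stop the line, clear the jam, reset the drive.",
    "SOP-207": "Failure code E17 low hydraulic pressure: check the fluid level and inspect hoses.",
    "SOP-310": "Weekly lubrication schedule for conveyor bearings.",
}


def tokens(text: str) -> set:
    """Lowercase alphanumeric tokens used for the overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list:
    """Return the k SOPs sharing the most tokens with the query."""
    q = tokens(query)
    ranked = sorted(
        SOP_LIBRARY.items(),
        key=lambda item: len(q & tokens(item[1])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(failure_code: str, message: str) -> str:
    """Assemble a prompt that grounds the model in the retrieved SOP text."""
    context = "\n".join(
        f"[{sop_id}] {text}" for sop_id, text in retrieve(f"{failure_code} {message}")
    )
    return (
        "Answer using only the procedures below.\n"
        f"{context}\n"
        f"Failure code: {failure_code}\n"
        f"HMI message: {message}\n"
        "List the troubleshooting steps for the operator."
    )


def answer(failure_code: str, message: str, llm=None) -> str:
    """Call the model if one is supplied; otherwise return the prompt for inspection."""
    prompt = build_prompt(failure_code, message)
    return llm(prompt) if llm else prompt


print(answer("E42", "conveyor jam"))
```

Keeping the SOP identifier in the prompt lets the response cite its source, so the operator can open the full document when the summary is not enough.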

Summary

The opportunity for Generative AI is undeniable, but finding your organization's path to value requires scrutinizing the use cases, putting safeguards in place to maintain information security and putting the tools in the hands of your people in a way that makes their work more seamless, more productive and of higher quality.

Those able to navigate these hurdles effectively will position themselves to harness the transformative power of Generative AI to drive efficiency, foster innovation and gain competitive advantage.